Bayesian Inference for Vector AutoRegression (VAR) Models¶

Introduction to Bayesian VAR¶

Bayesian inference provides a powerful framework for estimating the parameters of Vector AutoRegression (VAR) models, incorporating prior knowledge and uncertainty into the analysis. Unlike classical estimation methods that rely solely on the observed data, Bayesian approaches allow for the integration of prior beliefs about the parameters’ likely values, which can be particularly useful in situations with limited or noisy data.

VAR Process¶

The general formulation of a VAR(p) model can be expressed as:

where:

$ X_t $ is the vector of variables at time $ t $,

$ A_1, A_2, \dots, A_p $ are the matrices of coefficients for each lag,

$ \epsilon_t $ is the vector of error terms, which are assumed to follow a normal distribution with mean 0 and covariance matrix $ :nbsphinx-math:`Sigma `$.

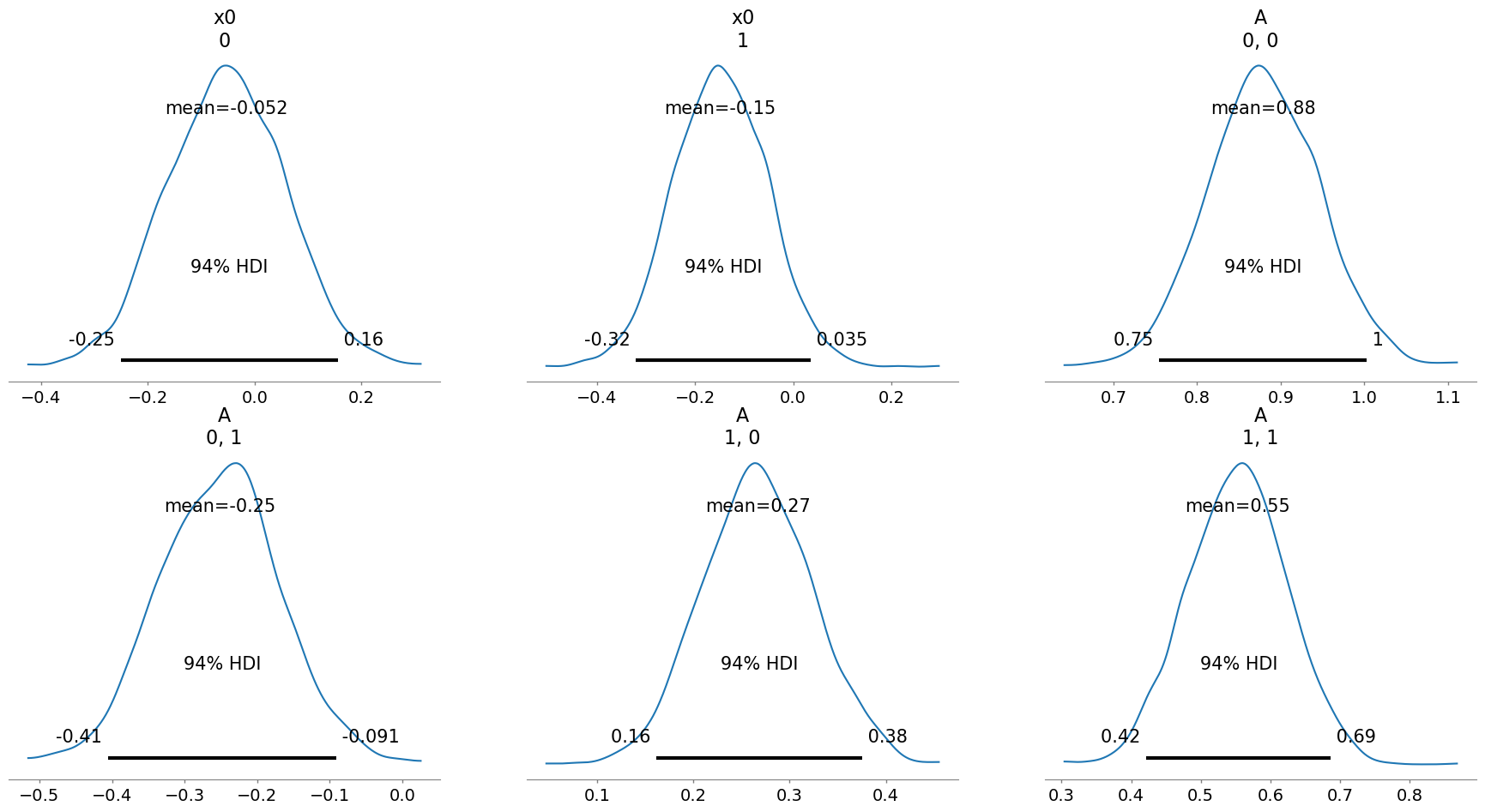

We will focus on a VAR(1) model. In the Bayesian setting, the focus shifts to estimating the posterior distributions of the coefficients $ A_1, A_2, \dots, A_p $ and the error covariance matrix $ :nbsphinx-math:`Sigma `$ given the observed data and prior distributions for these parameters.

Implementation in infer_VAR_bayes.py¶

A typical Bayesian VAR model implementation involves:

Defining Prior Distributions: Specifying prior distributions for the VAR model parameters based on previous studies, expert knowledge, or other relevant information.

Computing Posterior Distributions: Using Bayes’ theorem to update the prior distributions with information from the observed data, resulting in posterior distributions that reflect both the prior beliefs and the evidence from the data.

Parameter Estimation and Inference: Drawing samples from the posterior distributions of the model parameters to perform inference, estimate model dynamics, and make predictions.

Visualization and Analysis: Generating plots and summaries of the posterior distributions, predicted values, and other quantities of interest to analyze the model results.

Notebook Structure¶

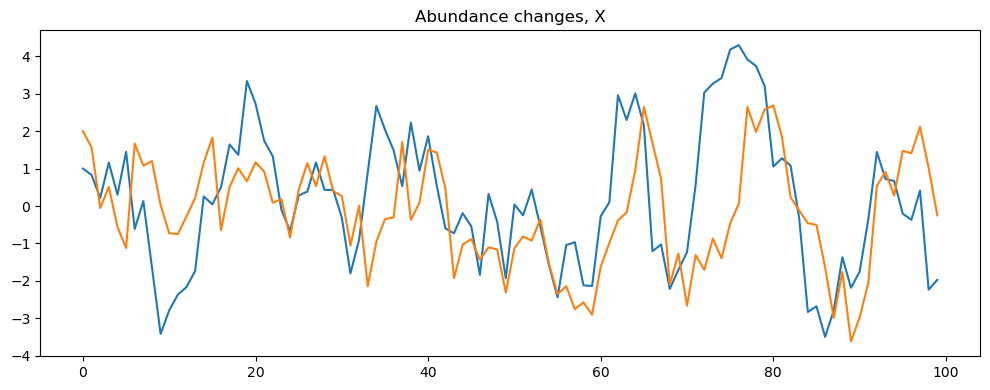

This notebook demonstrates how to run BVAR inference on simulated data using the infer_VAR class. We will explore different methodologies for importing data, estimating the posterior distributions of a VAR model, and visualizing the results to gain insights into the dynamics of the system.

Objective¶

Our goal is to infer the posterior distribution of a VAR model’s parameters using Bayesian methods. By employing the infer_VAR class, we aim to visualize the posterior distributions and leverage the plot_posteriors method to extract and understand the dynamics inherent in the VAR model process.

Example Usage¶

The first step is obtaining the data to be used in the analysis. In this example, we will simulate a VAR(1) process with 2 variables and 100 observations. We will then use the infer_VAR class to estimate the posterior distributions of the model parameters and visualize the results.

[1]:

from mimic.model_simulate.sim_VAR import *

from mimic.model_infer.infer_VAR_bayes import *

from mimic.utilities.utilities import read_parameters

WARNING (pytensor.tensor.blas): Using NumPy C-API based implementation for BLAS functions.

[2]:

# Import parameters from JSON file

simulator = sim_VAR()

simulator.read_parameters('./parameters.json')

simulator.simulate("VARsim")

[3]:

infer = infer_VAR(simulator.data)

infer.run_inference()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [x0, A, noise_chol]

Sampling 2 chains for 1_000 tune and 2_000 draw iterations (2_000 + 4_000 draws total) took 13 seconds.

We recommend running at least 4 chains for robust computation of convergence diagnostics

Results saved as:

NetCDF file: model_posterior_default_v3.nc

Data file: data_default_v3.npz

[4]:

# save the simulated data on a file:

simulator.save_data('./simulated_data.csv')

[5]:

# Set up the inference by importing the simulation data from a file

# Construct the full path to the CSV file

csv_file_path = './simulated_data.csv'

# Create an instance of infer_VAR and import the data

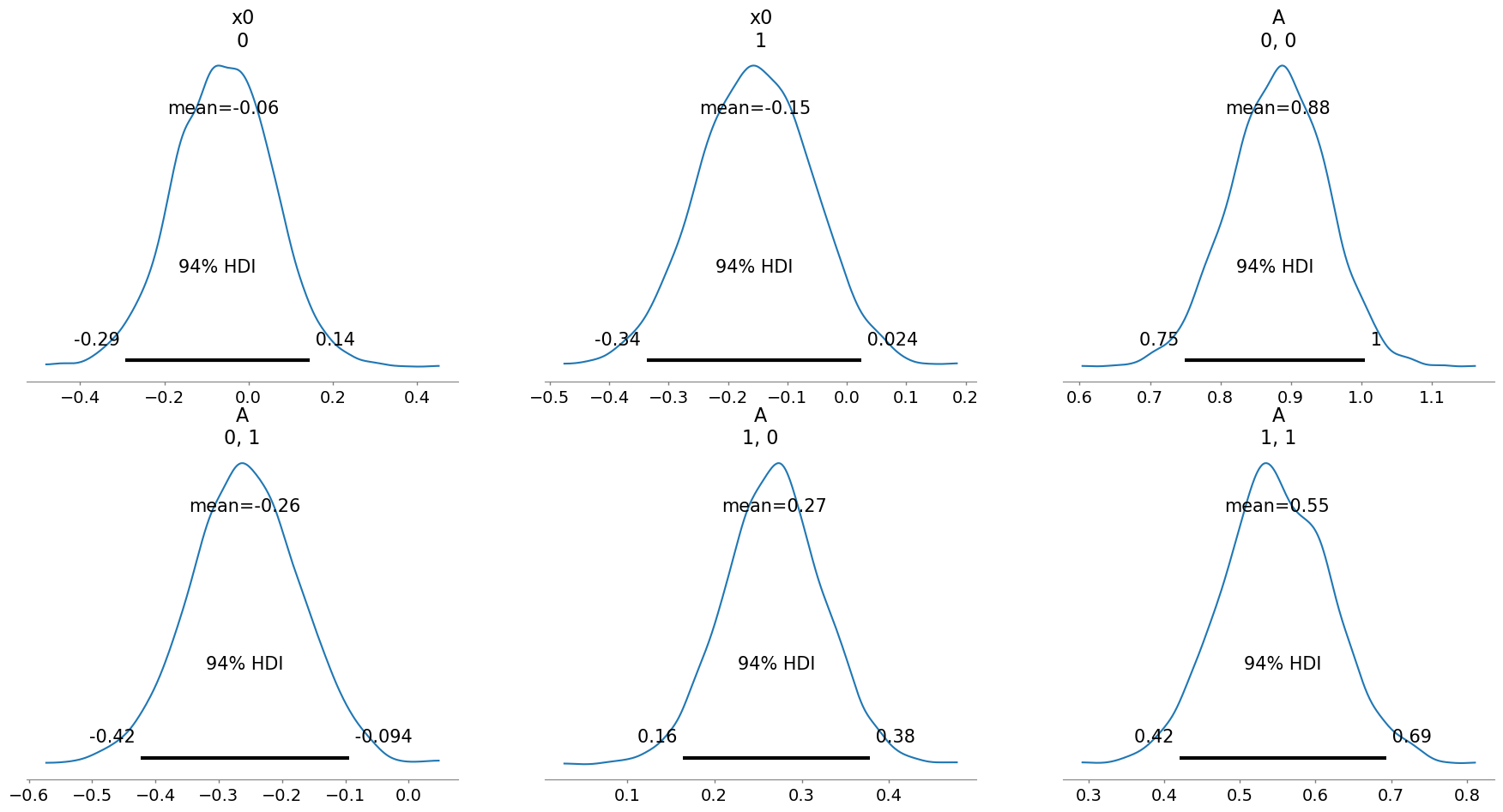

infer2 = infer_VAR(data=None)

infer2.import_data(csv_file_path)

[6]:

infer2.run_inference()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [x0, A, noise_chol]

Sampling 2 chains for 1_000 tune and 2_000 draw iterations (2_000 + 4_000 draws total) took 13 seconds.

We recommend running at least 4 chains for robust computation of convergence diagnostics

Results saved as:

NetCDF file: model_posterior_default_v4.nc

Data file: data_default_v4.npz

[7]:

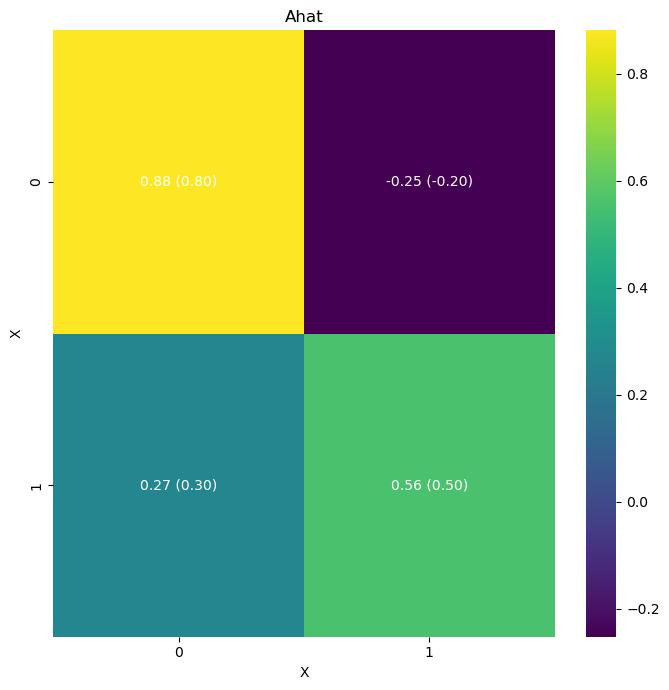

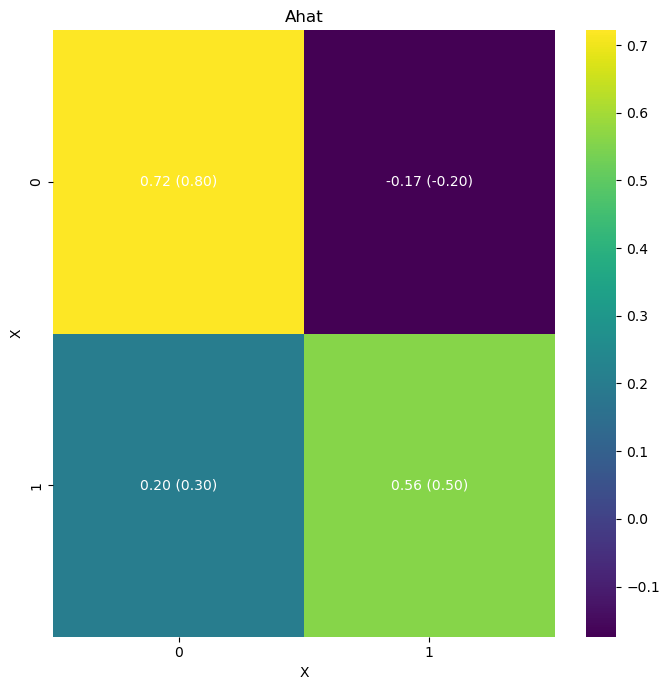

# Let's plot these results in a heatmap with the original coefficients (A) and the inferred coefficients (A_hat

A = np.array([[0.8, -0.2],

[0.3, 0.5]])

true_values = [A]

idata = az.from_netcdf('model_posterior_default_v1.nc')

infer2.plot_heatmap(

idata, matrices=["A"], true_values=true_values)

# infer2.posterior_analysis(A=A)

[8]:

# Set priors for the inference

infer3 = infer_VAR(simulator.data)

intercepts = np.array([0.0, 0.0])

coefficients = np.array([[0.5, 0.5],

[0.5, 0.5]])

covariance_matrix = np.eye(2)

# Use keyword arguments for set_parameters

infer3.set_parameters(

intercepts=intercepts,

coefficients=coefficients,

covariance_matrix=covariance_matrix

)

# Run the inference

infer3.run_inference()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [x0, A]

Sampling 2 chains for 1_000 tune and 2_000 draw iterations (2_000 + 4_000 draws total) took 10 seconds.

We recommend running at least 4 chains for robust computation of convergence diagnostics

Results saved as:

NetCDF file: model_posterior_default_v5.nc

Data file: data_default_v5.npz

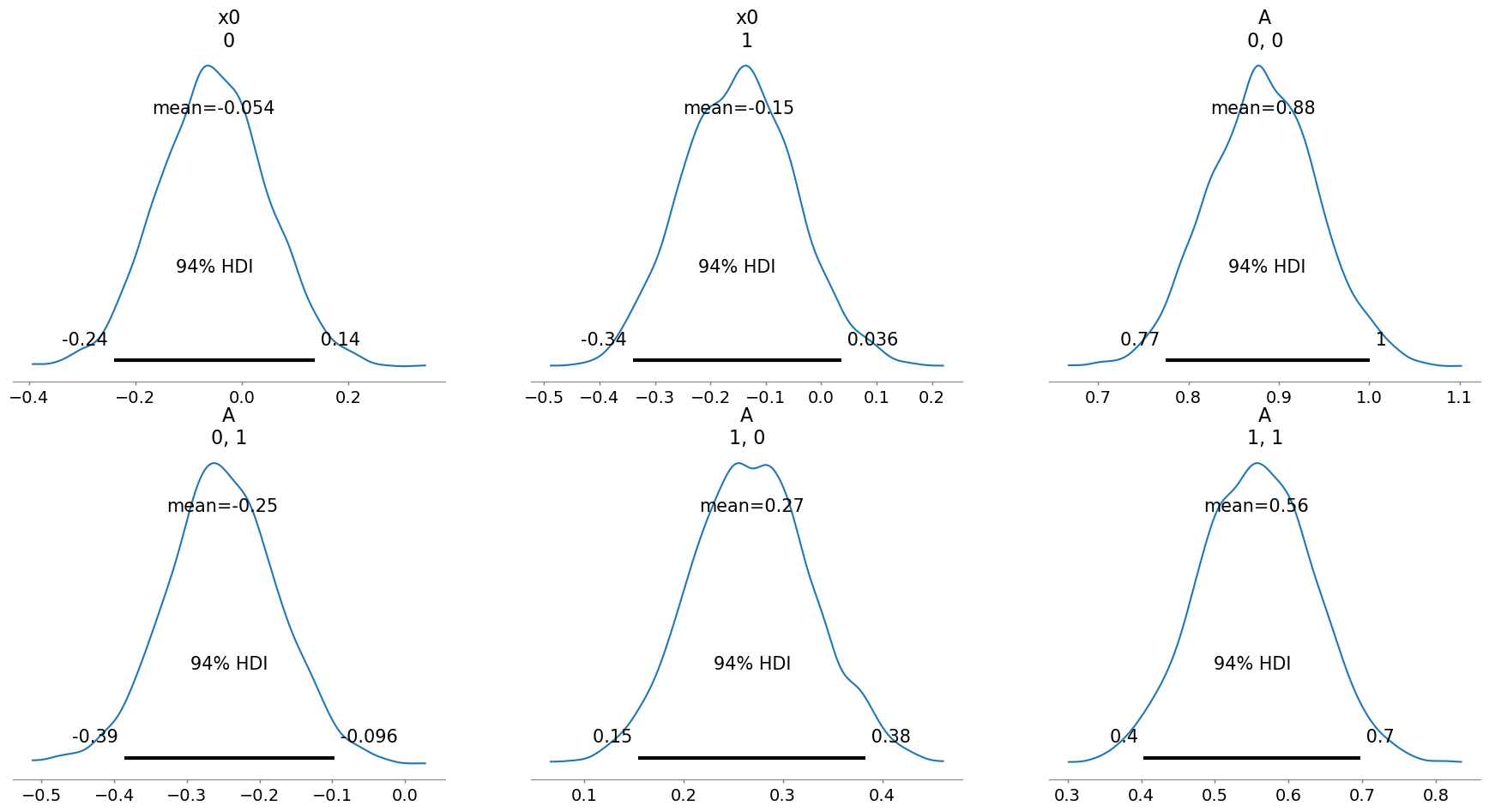

[10]:

infer3.posterior_analysis(A=A)